Is Artificial Intelligence Magic?

Artificial intelligence can perform feats that seem like sorcery. AI can drive cars and fly drones. It can compose original music, write poetry that isn’t too awful, and design recipes that do sound awful (blueberry and spinach pizza, anyone?).

AI can do some things better than humans: lip reading, diagnosing diseases such as pneumonia and some cancers, transcribing speech, and playing Jeopardy!, Go, Texas Hold ’em, and a variety of video games. AI software can even learn to make its own AI software.

It’s almost quaint to mention science fiction writer Arthur C. Clarke’s famous adage, “Any sufficiently advanced technology is indistinguishable from magic.” Yet to the majority of people who do not understand AI’s machinations, these technologies may as well be wizardry.

We want to go one step further and argue that artificial intelligence is not merely indistinguishable from magic: it actually invokes elements of magic, anthropologically speaking, and is driven by magical thinking.

So, what is magic?

Magic can be a slippery concept: arcane, obscure, and all too readily dismissed by those who live in supposedly scientific and enlightened societies. The late anthropologist Alfred Gell wrote that magic “has not disappeared but has become more diverse and difficult to identify.”

As Gell explained, magic infuses both technology and art. Many people associate the term “technology” with digital innovations such as smartphones and the internet. But medicines, food storage systems, houses, clothes, musical instruments, printing, writing, and even language are all technologies.

To Gell, technologies are ways of achieving results that might otherwise prove elusive or unattainable. An ax is a technology for dismembering animals, cutting down trees to build shelter or carve a path, or attacking and defending. A flute is a “psychological weapon” whose purpose is to enchant and play upon our emotions, thus combining technology, artistry, and magic.

AI is not an “advanced technology” in the same way as nuclear fission, lab-grown meat, hyperloops, or anything else on the bleeding edge of possibility. It’s little more than a dense thicket of math, calculated quickly. So how can math be magic?

In anthropology, magic tends to involve two elements:

- Manipulating symbols (through incantations, music, drawings, writing, and utterances, for example) to bring about some physical change in the world.

- Obtaining some ideal product or outcome without any cost or effort.

Math and artificial intelligence also manipulate symbols—numbers, letters, and computer code—to bring about change. In artificial intelligence, you take one thing (a set of inputs, such as crime statistics), manipulate those symbols in obscure and obfuscatory ways, and transform them into another thing (an output, such as guidance on where to deploy police forces and which groups of people to target).

Recently, we have begun to stack up countless examples of how the digital manipulation of symbols alters people’s behaviors, feelings, and experiences.

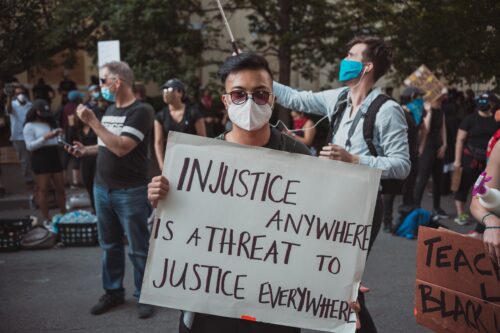

Using “predictive policing,” law enforcement and researchers plug big data into a computer, and an algorithm predicts who is most likely to commit a crime. These predictions shape whom police officers target for arrest and intervention, with real consequences, especially for those who are already marginalized. Algorithms are also influencing how judges hand down sentences.

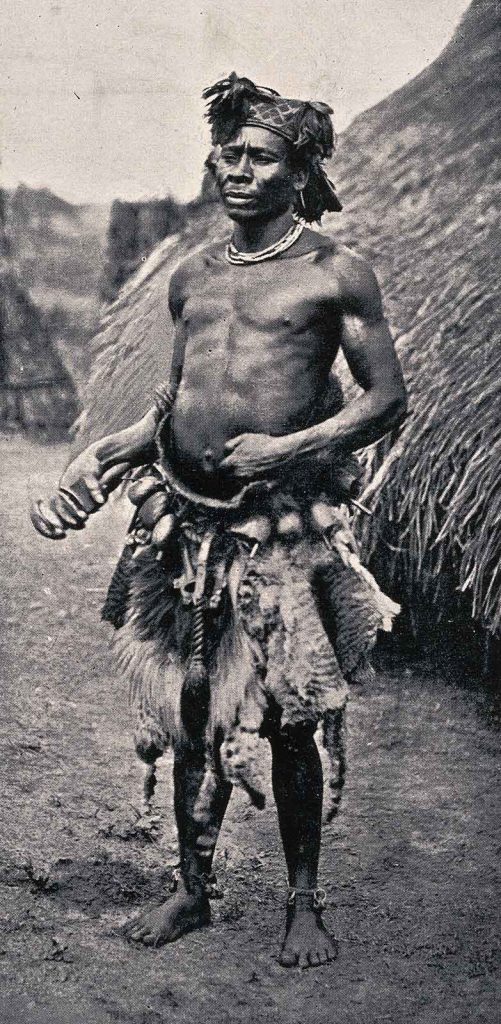

A similar magical manipulation of symbols can be found in witchcraft and divination. In North-Central Africa, Zande witch doctors traditionally fed poisonous vegetable extracts (an extreme kind of input) to a chicken. They then determined the answers to questions of justice through an equally extreme output: the bird’s survival or death. This method, widely practiced before the colonial period, helped Zande chiefs and judges hand down sentences.

Practitioners of folk magic have used effigies and poppets (dolls) in an attempt to change people’s lives from a distance. Tech companies use computer code to change people’s lives around the world. For example, Facebook created a tool that uses artificial intelligence to map disaster-struck areas, directing responders to hidden roads so they can deliver aid. And Uber’s use of facial recognition technology is impacting transgender people’s ability to earn a living.

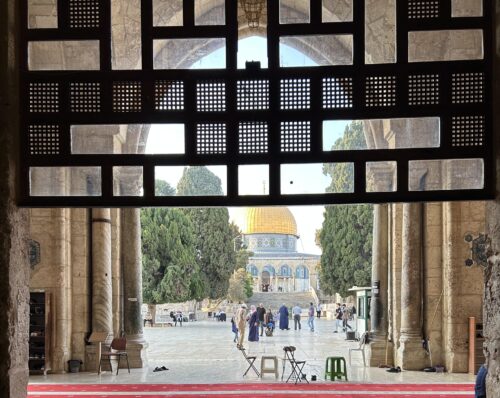

In some cultures, oracles cast cowrie shells, rub boards, poke branches into termite mounds, or scry into crystal balls and reflective surfaces in order to divine the truth and make decisions. In modern health care, computerized neural networks can automatically diagnose common conditions, helping doctors make decisions that change and even save people’s lives.

Anthropologist M.C. Elish and technology researcher danah boyd argue that any simplistic equating of AI with magic risks diminishing the sheer amounts of labor, knowledge, and physical resources required in the production of many AI systems. Yet, while magic can be about production without cost or effort, it is also about ideals and how we, as flawed and constrained humans, might attain these ideals. To better understand how this applies to artificial intelligence, we can draw links to another “magical” endeavor: art.

Art, as prized in many post-Enlightenment societies, is almost ineffable—a separate and transcendent realm of originality, creativity, and inspiration elevated to a quasi-religious status. As comic book writer (and magician) Alan Moore said, “To cast a spell is simply to spell, to manipulate words, to change people’s consciousness, and this is why I believe that an artist or writer is the closest thing in the contemporary world to a shaman.”

But in societies without the segregated and lofty institution of “fine art,” art is a technical activity requiring not merely knowledge, skill, and work, but also mechanical virtuosity.

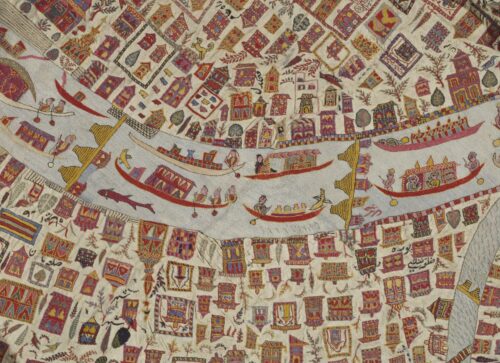

For example, the people of the Trobriand Islands in Papua New Guinea carve magnificent and fierce patterns onto the prow-boards of their canoes that are designed to intimidate and overwhelm all who see the vessels approaching. The magic—the enchantment, the psychological manipulation—comes not just from the object itself but also from the technical prowess required to make it.

Similarly, the development of artificial intelligence systems requires technical skill and also some artistry. Engineers must see patterns in complex information, visualize the architecture of an AI system, choose which data are relevant, specify the statistical models, and creatively solve problems. It’s no wonder that tribes of Silicon Valley engineers are microdosing hallucinogens to nurture and promote the free flow of creative ideas and insights.

It is this creativity—or, as Gell puts it, the “role of ‘magical’ ideas”—that causes technology to constantly change. “Technical innovations occur,” he wrote, “not as the result of attempts to supply wants but in the course of attempts to realize technical feats heretofore considered ‘magical.’”

The ultimate example is a concept in AI called “the singularity”: an “awake” and superhumanly intelligent computer. This potential entity would be capable of manipulating symbols to “think up” and build new AI programs that themselves would bring about changes in the world. It would be the ideal intelligence, the last intelligence we ever need to create, because it would no longer require our skill, knowledge, or labor. Creating the singularity would be, for all intents and purposes, a magical endeavor.