Race Is Real, But It’s Not Genetic

A friend of mine with Central American, Southern European, and West African ancestry is lactose intolerant. Drinking milk products upsets her stomach, and so she avoids them. About a decade ago, because of her low dairy intake, she feared that she might not be getting enough calcium, so she asked her doctor for a bone density test. He responded that she didn’t need one because “blacks do not get osteoporosis.”

My friend is not alone. The view that black people don’t need a bone density test is a longstanding and common myth. A 2006 study in North Carolina found that of 531 African American and Euro-American women screened for bone mineral density, only 15 percent were African American, even though African American women made up almost half of that clinical population. In 2000, a health fair in Albany, New York, turned into a ruckus when black women were refused free osteoporosis screening. The situation has not changed much since.

Meanwhile, FRAX, a widely used calculator that estimates one’s risk of osteoporotic fractures, is based on bone density combined with age, sex, and, yes, “race.” Race, even though it is never defined or demarcated, is baked into the fracture risk algorithms.

Let’s break down the problem.

First, presumably based on appearances, doctors placed my friend and others into a socially defined race box called “black,” which is a tenuous way to classify anyone.

Race is a highly flexible way in which societies lump people into groups based on appearance, which is assumed to indicate deeper biological or cultural connections. Because race is a cultural category, its definitions and descriptions vary. “Color” lines based on skin tone can shift from place to place and over time, and that very instability makes the categories problematic for any sort of scientific pronouncement.

Second, these medical professionals assumed that there was a firm genetic basis behind this racial classification, which there isn’t.

Third, they assumed that this purported racially defined genetic difference would protect these women from osteoporosis and fractures.

Some studies suggest that African American women—meaning women whose ancestry ties back to Africa—may indeed reach greater bone density than other women, which could be protective against osteoporosis. But that does not mean “being black”—that is, possessing an outward appearance that is socially defined as “black”—prevents someone from getting osteoporosis or bone fractures. Indeed, this same research also reports that African American women are more likely to die after a hip fracture. The link between osteoporosis risk and certain racial populations may be due to lived differences such as nutrition and activity levels, both of which affect bone density.

But more important: Geographic ancestry is not the same thing as race. African ancestry, for instance, does not tidily map onto being “black” (or vice versa). In fact, a 2016 study found wide variation in osteoporosis risk among women living in different regions within Africa. Their genetic risks have nothing to do with their socially defined race.

When medical professionals or researchers look for a genetic correlate to “race,” they are falling into a trap: They assume that geographic ancestry, which does indeed matter to genetics, can be conflated with race, which does not. Sure, different human populations living in distinct places may statistically have different genetic traits—such as sickle cell trait (discussed below)—but such variation is about local populations (people in a specific region), not race.

We’ve all been engulfed by “the smog” of thinking that “race” is biologically real, often as unaware of it as a fish is of water. Thus, it is easy to conclude, incorrectly, that “racial” differences in health, wealth, and all manner of other outcomes are the inescapable result of genetic differences.

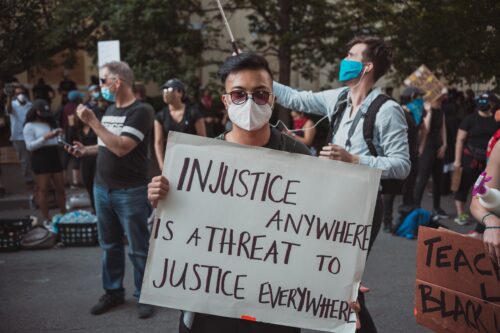

The reality is that socially defined racial groups in the U.S. and almost everywhere else do differ in outcomes. But that’s not due to genes. Rather, it is due to systemic differences in lived experience and institutional racism.

Communities of color in the United States, for example, often have reduced access to medical care, well-balanced diets, and healthy environments. They are often treated more harshly in their interactions with law enforcement and the legal system. Studies show that they experience greater social stress, including endemic racism, that adversely affects all aspects of health. For example, babies born to African American women are more than twice as likely to die in their first year than babies born to non-Hispanic Euro-American women.

As a professor of biological anthropology, I teach and advise college undergraduates. While my students are aware of inequalities in the life experiences of different socially delineated racial groups, most of them also think that biological “races” are real things. Indeed, more than half of Americans still believe that their racial identity is “determined by information contained in their DNA.”

For the longest time, Europeans thought that the sun revolved around the Earth. Their culturally attuned eyes saw this as obvious and unquestionably true. Just as astronomers now know that’s not true, nearly all population geneticists know that dividing people into races neither explains nor describes human genetic variation.

Yet this idea of race-as-genetics will not die. For decades it has been exposed to the sunlight of facts, but, like a vampire, it keeps sucking blood: not only surviving but doing harm by twisting science to support racist ideologies. With apologies for the grisly metaphor, it is time to put a wooden stake through the heart of race-as-genetics. Doing so will make for better science and a fairer society.

In 1619, the first people from Africa arrived in Virginia and became integrated into society. Only after African and European bond laborers united in various rebellions did colony leaders recognize the “need” to separate laborers. “Race” divided indentured Irish and other Europeans from enslaved Africans, and it reduced opposition by those of European descent to the intolerable conditions of enslavement. What made race different from other prejudices, including ethnocentrism (the idea that a given culture is superior), is that it claimed that differences were natural, unchanging, and God-given. Eventually, race also received the stamp of science.

Over the next decades, Euro-American natural scientists debated the details of race, asking questions such as how many times the races were created (once, as stated in the Bible, or many separate times), how many races there were, and what their defining, essential characteristics were. But they did not question whether races were natural things. They reified race, making the idea real through constant, unquestioning use.

In the 1700s, Carl Linnaeus, the father of modern taxonomy and someone not without ego, liked to imagine himself as organizing what God created. Linnaeus famously classified our own species into races based on reports from explorers and conquerors.

The race categories he created included Americanus, Africanus, and even Monstrosus (for wild and feral individuals and those with birth defects), and their essential defining traits included a biocultural mélange of color, personality, and modes of governance. Linnaeus described Europaeus as white, sanguine, and governed by law, and Asiaticus as yellow, melancholic, and ruled by opinion. These descriptions highlight just how much ideas of race are shaped by the social ideas of the time.

In line with early Christian notions, these “racial types” were arranged in a hierarchy: a great chain of being, from lower forms to higher forms that are closer to God. Europeans occupied the highest rungs, and other races were below, just above apes and monkeys.

So the first big problems with the idea of race are that members of a racial group do not share “essences” (Linnaeus’ idea of some underlying spirit that unified groups) and that races are not hierarchically arranged. A related fundamental flaw is that races were seen as static and unchanging, with no allowance for a process of change, or what we now call evolution.

There have been lots of efforts since Charles Darwin’s time to fashion the typological and static concept of race into an evolutionary concept. For example, Carleton Coon, a former president of the American Association of Physical Anthropologists, argued in The Origin of Races (1962) that five races evolved separately and became modern humans at different times.

One nontrivial problem with Coon’s theory, and all attempts to make race into an evolutionary unit, is that there is no evidence. Rather, all the archaeological and genetic data point to abundant flows of individuals, ideas, and genes across continents, with modern humans evolving at the same time, together.

A few pundits, such as Charles Murray of the American Enterprise Institute, and science writers, such as Nicholas Wade, formerly of The New York Times, still argue that even though humans don’t come in fixed, color-coded races, dividing us into races does a decent job of describing human genetic variation. Their position is shockingly wrong. We’ve known for almost 50 years that race does not describe human genetic variation.

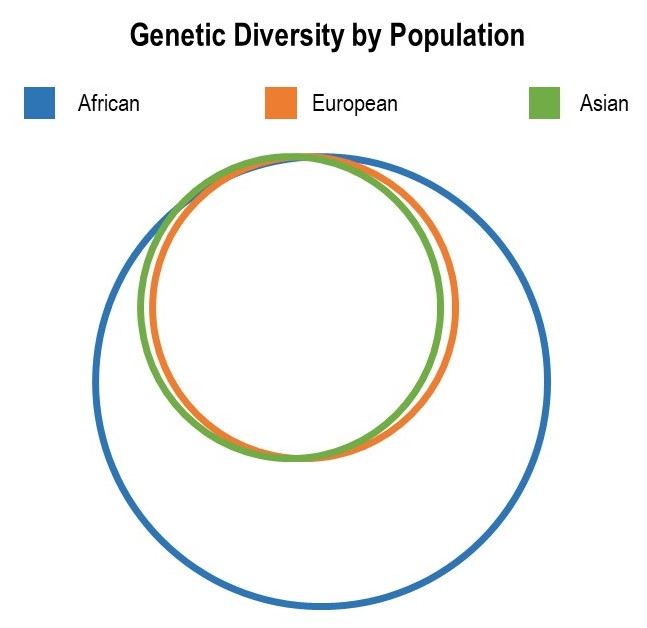

In 1972, Harvard evolutionary biologist Richard Lewontin set out to test how much human genetic variation could be attributed to “racial” groupings. In a now-famous study, he assembled genetic data from around the globe and calculated how that variation was statistically apportioned within versus among races. He found that only about 6 percent of genetic variation in humans could be statistically attributed to racial categorizations: the social category of race explains very little of the genetic diversity among us.
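A toy calculation may help show what “apportioning” variation means. The sketch below is a stand-in, not Lewontin’s own method: he measured diversity with a Shannon information statistic, whereas this uses the closely related heterozygosity-based partition (Nei’s G_ST) for a single gene with two variants. The group labels and frequencies are invented for illustration and are not real data, and the function names are hypothetical.

```python
def expected_heterozygosity(p: float) -> float:
    """Probability that two randomly drawn gene copies differ, for a gene with
    two variants where one variant has frequency p."""
    return 2 * p * (1 - p)


def apportion(freqs: dict[str, float]) -> tuple[float, float]:
    """Split total diversity at one gene into within-group and between-group shares.

    `freqs` maps a (hypothetical) group label to that group's variant frequency.
    Groups are weighted equally, a simplifying assumption.
    Returns (share_within, share_between); the two shares sum to 1.
    """
    n = len(freqs)
    pooled_p = sum(freqs.values()) / n  # variant frequency if all groups are pooled
    h_total = expected_heterozygosity(pooled_p)
    if h_total == 0:
        raise ValueError("no variation to apportion")
    # Average diversity found inside each group on its own.
    h_within = sum(expected_heterozygosity(p) for p in freqs.values()) / n
    share_between = (h_total - h_within) / h_total  # Nei's G_ST
    return 1 - share_between, share_between


# Made-up frequencies for three hypothetical groups (illustration only).
within, between = apportion({"group A": 0.45, "group B": 0.55, "group C": 0.50})
print(f"within groups: {within:.1%}, between groups: {between:.1%}")
```

With these invented frequencies the groups differ only slightly, so about 99 percent of the diversity falls within groups. Lewontin’s figure of roughly 6 percent among races came from real data on many genes, which this sketch does not attempt to reproduce.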

Furthermore, recent studies reveal that the genetic variation between any two individuals is very small: on the order of one single nucleotide polymorphism (SNP), or single-letter change in our DNA, per 1,000 letters. That means racial categorization could, at most, relate to about 6 percent of the variation found in that 1 letter in 1,000. Put simply, race fails to explain much.
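Spelled out as arithmetic, this rough bound multiplies the two figures together. It is a back-of-the-envelope reading that treats the 6 percent share of diversity as if it were a share of the letters that differ, which is a simplification: Lewontin’s figure apportions diversity, not individual DNA sites. The variable names are illustrative.

```python
from fractions import Fraction

# Rough figures from the text: about 1 DNA letter in 1,000 differs between two people,
# and about 6 percent of human genetic variation is attributable to "race" categories.
differing_share = Fraction(1, 1000)
race_share = Fraction(6, 100)

# Simplification: treat the 6 percent share of diversity as a share of the differing letters.
race_related_share = differing_share * race_share

print(race_related_share)            # 3/50000
print(race_related_share * 100_000)  # 6, i.e. about 6 letters in every 100,000
```

On that reading, race would relate to something like 6 DNA letters in every 100,000, or 0.006 percent.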

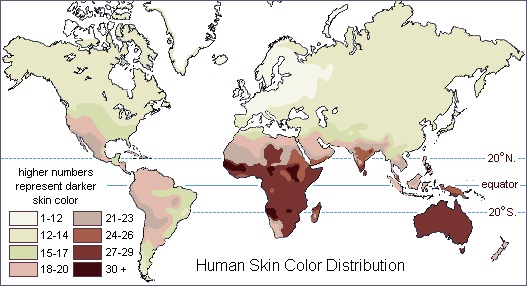

In addition, genetic variation can be greater within groups that societies lump together as one “race” than it is between “races.” To understand how that can be true, first imagine six individuals: two each from the continents of Africa, Asia, and Europe. Again, all of these individuals will be remarkably similar: On average, only about 1 out of 1,000 of their DNA letters will differ. A study by Ning Yu and colleagues places the overall difference more precisely at 0.88 per 1,000.

The researchers further found that people in Africa had less in common with one another than they did with people in Asia or Europe. Let’s repeat that: On average, two individuals in Africa are more genetically dissimilar from each other than either one of them is from an individual in Europe or Asia.
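The “differences per 1,000 letters” measure behind the 0.88 figure can be sketched as a count of mismatched positions between aligned sequences, averaged over pairs of people. This is a minimal illustration that assumes already-aligned, equal-length sequences; the function names and the ten-letter sequences are hypothetical, and a toy example like this says nothing about real populations. It shows only how a within-group average can come out larger than a between-group one.

```python
from itertools import combinations, product


def differences_per_1000(seq_a: str, seq_b: str) -> float:
    """Mismatched letters per 1,000 positions for two aligned, equal-length sequences."""
    if len(seq_a) != len(seq_b) or not seq_a:
        raise ValueError("sequences must be non-empty and the same length")
    mismatches = sum(a != b for a, b in zip(seq_a, seq_b))
    return 1000 * mismatches / len(seq_a)


def mean_within(group: list[str]) -> float:
    """Average difference over every pair of sequences inside one group."""
    pairs = list(combinations(group, 2))
    if not pairs:
        raise ValueError("a group needs at least two sequences")
    return sum(differences_per_1000(a, b) for a, b in pairs) / len(pairs)


def mean_between(group_1: list[str], group_2: list[str]) -> float:
    """Average difference over every pairing of one sequence from each group."""
    pairs = list(product(group_1, group_2))
    return sum(differences_per_1000(a, b) for a, b in pairs) / len(pairs)


# Invented ten-letter sequences for two hypothetical groups.
group_x = ["ACGTACGTAC", "ACGTTCGAAC"]
group_y = ["ACGTACGAAC"]

print(mean_within(group_x))            # 200.0
print(mean_between(group_x, group_y))  # 100.0
```

Real comparisons such as Yu and colleagues’ use far longer stretches of DNA and many individuals; the point here is only the bookkeeping.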

Homo sapiens evolved in Africa, and the groups that migrated out likely did not carry all of the genetic variation that had built up there. That’s an example of what evolutionary biologists call the founder effect: migrant populations who settle in a new region have less variation than the population they came from.

Genetic variation across Europe and Asia, and the Americas and Australia, is essentially a subset of the genetic variation in Africa. If genetic variation were a set of Russian nesting dolls, all of the other continental dolls pretty much fit into the African doll.

What all these data show is that the variation that scientists, from Linnaeus to Coon to the contemporary osteoporosis researcher, think is “race” is actually much better explained by a population’s location. Genetic variation is highly correlated with geographic distance. Ultimately, two factors together explain groups’ genetic distinctions from one another: first, how far apart they are geographically, and second, how long they have been apart. Compared to “race,” those factors not only better describe human variation but also invoke evolutionary processes to explain it.

Those osteoporosis doctors might argue that even though socially defined race poorly describes human variation, it still could be a useful classification tool in medicine and other endeavors. When the rubber of actual practice hits the road, is race a useful way to make approximations about human variation?

When I’ve lectured at medical schools, my most commonly asked question concerns sickle cell trait. Writer Sherman Alexie, a member of the Spokane-Coeur d’Alene tribes, put the question this way in a 1998 interview: “If race is not real, explain sickle cell anemia to me.”

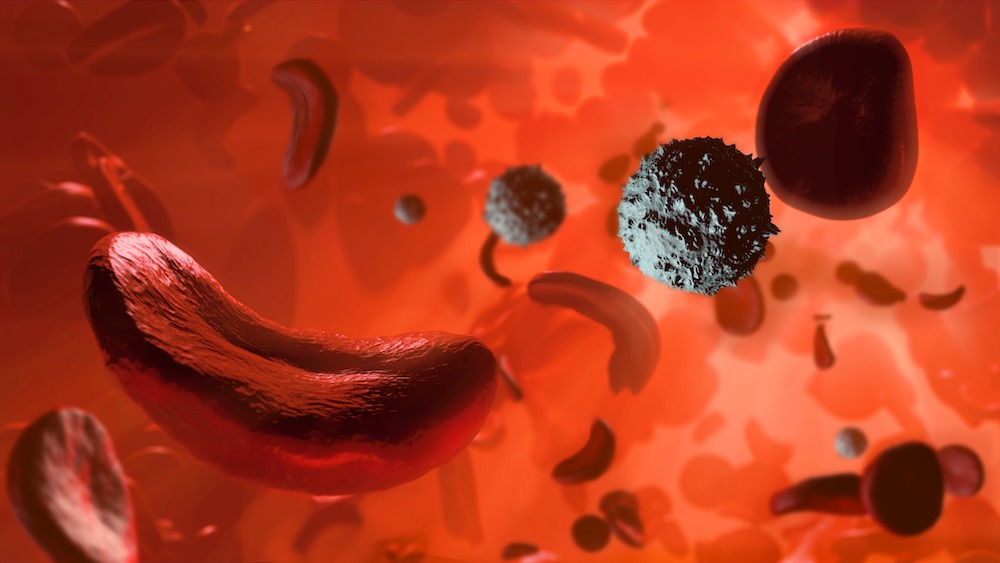

OK! Sickle cell is a genetic trait: It is the result of an SNP that changes the amino acid sequence of hemoglobin, the protein that carries oxygen in red blood cells. When someone carries two copies of the sickle cell variant, they will have the disease. In the United States, sickle cell disease is most prevalent in people who identify as African American, creating the impression that it is a “black” disease.

Yet scientists have known about the much more complex geographic distribution of the sickle cell mutation since the 1950s. It is almost nonexistent in the Americas and most parts of Europe and Asia, and also in large swaths of Northern and Southern Africa. On the other hand, it is common in West-Central Africa and also parts of the Mediterranean, Arabian Peninsula, and India. Globally, it does not correlate with continents or socially defined races.

In one of the most widely cited papers in anthropology, American biological anthropologist Frank Livingstone helped to explain the evolution of sickle cell. He showed that places with a long history of agriculture and endemic malaria have a high prevalence of sickle cell trait (a single copy of the allele). He put this information together with experimental and clinical studies that showed how sickle cell trait helped people resist malaria, and made a compelling case for sickle cell trait being selected for in those areas. Evolution and geography, not race, explain sickle cell anemia.

What about forensic scientists: Are they good at identifying race? In the U.S., forensic anthropologists are typically employed by law enforcement agencies to help identify skeletons, including inferences about sex, age, height, and “race.” The methodological gold standards for estimating race are algorithms based on a series of skull measurements, such as widest breadth and facial height. Forensic anthropologists assume these algorithms work.

The claim that forensic scientists are good at ascertaining race originates in a 1962 study of “black,” “white,” and “Native American” skulls, which reported an 80–90 percent success rate. That forensic scientists can tell “race” from a skull is a standard trope of both the scientific literature and popular portrayals. But my analysis of four later tests showed that when these methods were applied to Native American skulls from other contexts and locations, they averaged about two incorrect classifications for every correct one. The results are no better than a random assignment of race.
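Why is two wrong for every right no better than chance? If a method sorts skulls into three categories, like the 1962 study’s “black,” “white,” and “Native American,” then picking one of the three uniformly at random is right one time in three, whatever the true mix of skulls. Two incorrect for every correct identification is also one in three. The sketch assumes the later tests used the same three categories, which is an assumption made here; the function names are hypothetical.

```python
from fractions import Fraction


def accuracy_from_ratio(incorrect_per_correct: int) -> Fraction:
    """Share of identifications that are correct, given N incorrect for every 1 correct."""
    return Fraction(1, incorrect_per_correct + 1)


def chance_accuracy(n_categories: int) -> Fraction:
    """Expected share correct when one of n categories is picked uniformly at random.

    Each skull is guessed right with probability 1/n no matter which category it truly
    belongs to, so the overall expectation is also 1/n.
    """
    return Fraction(1, n_categories)


observed = accuracy_from_ratio(2)  # about two incorrect for every correct identification
chance = chance_accuracy(3)        # assumed three categories, as in the 1962 study

print(observed, chance, observed == chance)  # 1/3 1/3 True
```

By contrast, the 80–90 percent success rate claimed in 1962 would sit far above this one-in-three baseline.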

That’s because humans are not divisible into biological races. On top of that, human variation does not stand still. “Race groups” are impossible to define in any stable or universal way. It cannot be done based on biology—not by skin color, bone measurements, or genetics. It cannot be done culturally: Race groupings have changed over time and place throughout history.

Science 101: If you cannot define groups consistently, then you cannot make scientific generalizations about them.

Wherever one looks, race-as-genetics is bad science. Moreover, when society continues to chase genetic explanations, it misses the larger societal causes underlying “racial” inequalities in health, wealth, and opportunity.

To be clear, I am not denying that human biogenetic variation is real. Let’s simply continue to study human genetic variation free of the utterly constraining idea of race. When researchers want to discuss genetic ancestry or biological risks experienced by people in certain locations, they can do so without conflating these human groupings with racial categories. Genetic variation is an amazingly complex result of evolution and must never be reduced to race.

Similarly, race is real; it just isn’t genetic. It’s a culturally created phenomenon. We ought to know much more about the process of assigning individuals to a race group, including the category “white.” And we especially need to know more about the effects of living in a racialized world: for example, how a society’s categories and prejudices lead to health inequalities. Let’s be clear that race is a purely sociopolitical construction with powerful consequences.

It is hard to convince people of the dangers of thinking race is based on genetic differences. Like climate change, the structure of human genetic variation isn’t something we can see and touch, so it is hard to comprehend. And our culturally trained eyes play a trick on us by seeming to see race as obviously real. Race-as-genetics is even more deeply embedded ideologically than humanity’s reliance on fossil fuels and consumerism. For these reasons, racial ideas will prove hard to shift, but shifting them is possible.

Over 13,000 scientists have come together to form, and publicize, a consensus statement about the climate crisis, and that has surely helped move public opinion toward the science. Geneticists and anthropologists need to do the same for race-as-genetics. The recent American Association of Physical Anthropologists’ Statement on Race & Racism is a fantastic start.

In the U.S., slavery ended over 150 years ago and the Civil Rights Act of 1964 passed more than half a century ago, but the ideology of race-as-genetics remains. It is time to throw race-as-genetics on the scrapheap of ideas that are no longer useful.

We can start by getting my friend—and anyone else who has been denied—that long-overdue bone density test.

This article was republished on discovermagazine.com.